xyz

An experiment in AI collaboration

I’m excited to share my latest project, xyz—an experiment in AI collaboration built on top of Slack.

You can read more about how xyz works and get access on my website: https://calereid.xyz/xyz

If you’d be willing to try it and send me your thoughts (positive or negative), I’d be eternally grateful. I will post some demo codes in a comment on this post that you can use to get access to the beta.

The story behind xyz

I spent months this year going back and forth about whether to take a sabbatical, and I talked through the various dimensions of that decision with a lot of people. I also spent a lot of time with ChatGPT and Claude (and occasionally Gemini and Grok, too), because, unlike most humans in my life, they are more than happy to talk about my career aspirations at 2 AM.

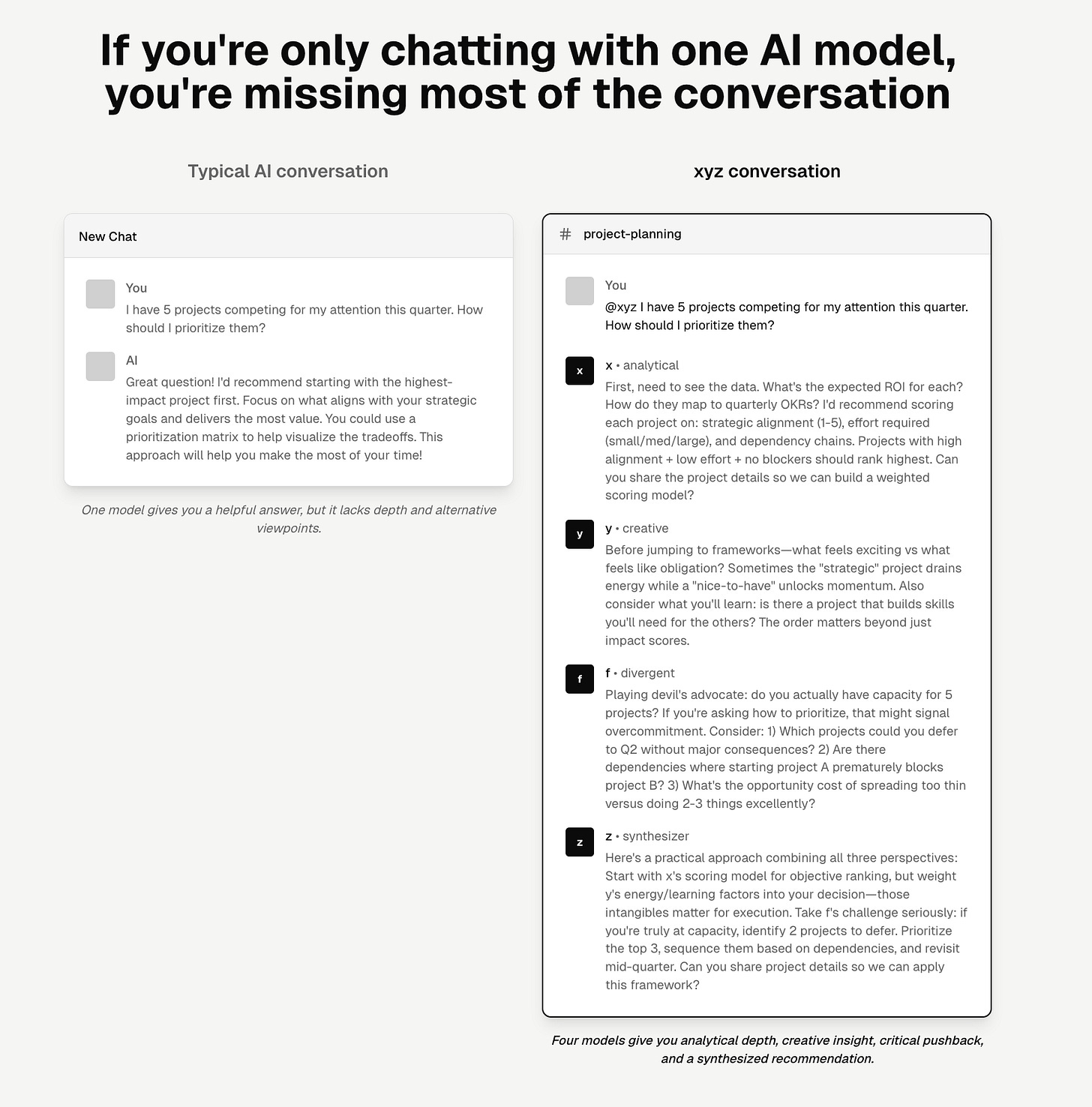

As part of this process, I fell into a habit of asking each model the same question, then copying and pasting their responses back and forth so each model could evaluate the others’ perspectives. In those moments, I wished I could just @Claude or @ChatGPT to get their input on a particular topic mid-conversation. I also wished I had some way to better organize all of the related conversations and more easily pass messages from one thread to another.

Through all of the discussion and reframing, I was eventually able to come to a decision that I felt confident in. This wasn’t the decision suggested by whatever model had the highest benchmarks at the time (which most certainly changed multiple times during those few months)—it was a decision based on the synthesis of all the information, advice, and perspective that my friends, family, and these models had offered over the course of hundreds of separate discussions.

At its core, xyz is about rejecting easy answers. It’s about bringing more and different perspectives into the conversation as part of the “ordinary course of business.” And it’s about not falling prey to a filter bubble that includes only you and a single AI model.

Here are the “big ideas” (or at least strong opinions) behind xyz:

Single-threaded chat sucks

ChatGPT (the chat part specifically) was originally just meant to be a cool demo. OpenAI didn’t intend for chat to become the primary interaction pattern for all of AI. But because of chat’s simplicity and familiarity as an existing conversation pattern, it has stuck.

This is unfortunate because decades of email and text messaging have more than demonstrated the limitations of single-threaded chat. Everyone has experienced how the longer an email chain goes on, the less valuable it gets. The minute someone tries to introduce a new topic, the whole conversation goes completely off the rails.

I just don’t believe that the best way to leverage this incredible technology is through what amounts to an AIM chat window.

In comparison, Slack was explicitly designed for asynchronous, written communication, which is perfect for AI. Many of Slack’s features, like threads, reminders, scheduled send, canvases (effectively native Markdown documents), and reactions, feel very natural to me when working with AI agents.

Collaboration > chat

Beyond just the UI/UX limitations of single-threaded chat, “chatting” is fundamentally the wrong descriptor for what we’re doing with these models (and agents based on these models). Chatting has an almost ephemeral quality to it (see: yapping). No one expects anything of substance to come from a chat.

Chats can be discarded (as proof: my text messages delete after 30 days, which multiple people have told me is insane). But collaboration, particularly the kind that results in useful work or deeper understanding, deserves to be persisted so that we can refer back to it when needed or pick up where we left off if new information arises.

It’s strange to think about all the decisions I’ve made based on collaborating with AI where those models were never told about the eventual outcome of the situation and thus never had a chance to learn from it. That seems like a missed opportunity.

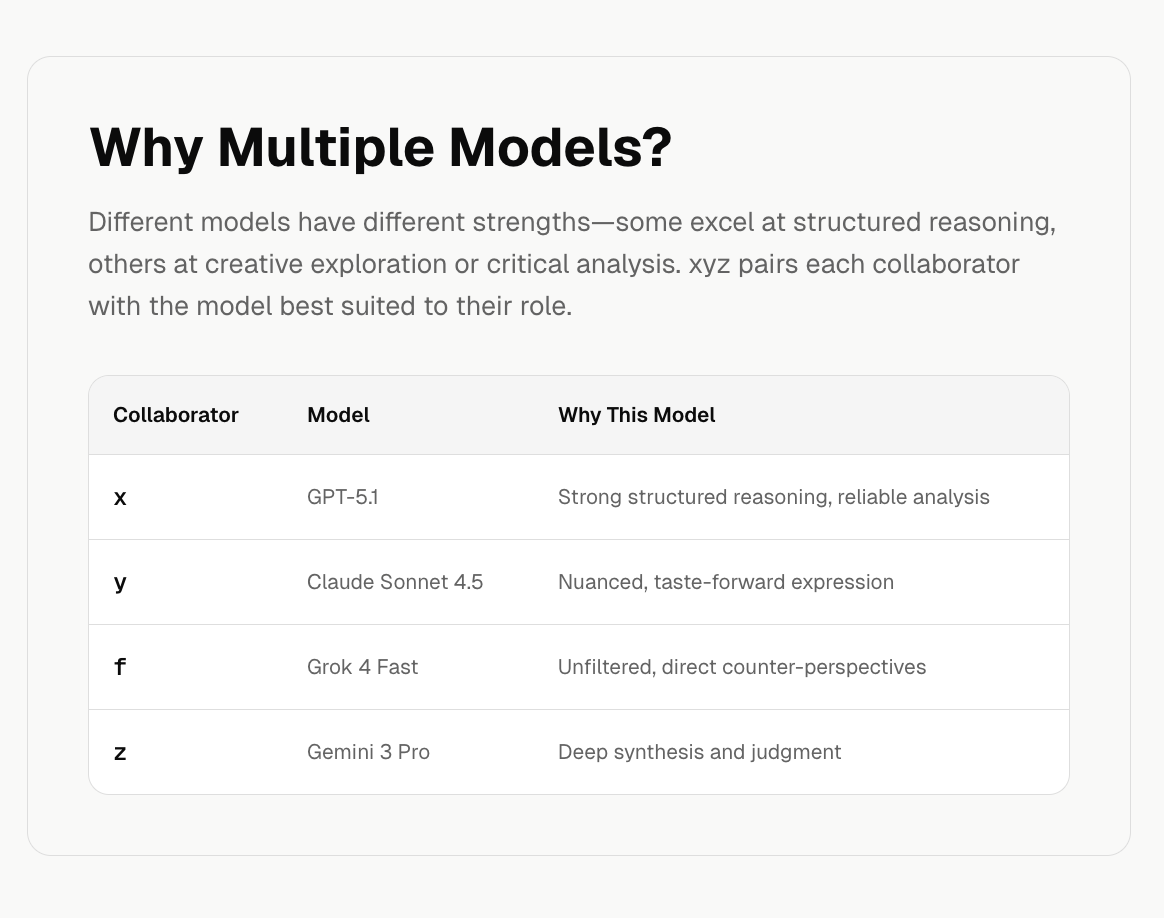

Multiple models, better thinking

Every AI model has strengths, weaknesses, and personality quirks that shape what it feels like to work with it. This isn’t super obvious if you’re asking a model to edit a draft of an email, but it makes a big difference if you’re asking questions that require subjectivity, personalization, or nuance. These differences in “profiles” have compounded as model capabilities have grown through advances like MCP, tool use, voice, memory, skills, subagents, etc.

It’s too difficult (and expensive) for most people to use multiple models, so they don’t. Which is a shame, because they are missing out on a tremendous amount of value and perspective.

Agents need orchestration

If multiple models mean better thinking, we need better systems for defining and managing how agents (models + tools) work together.

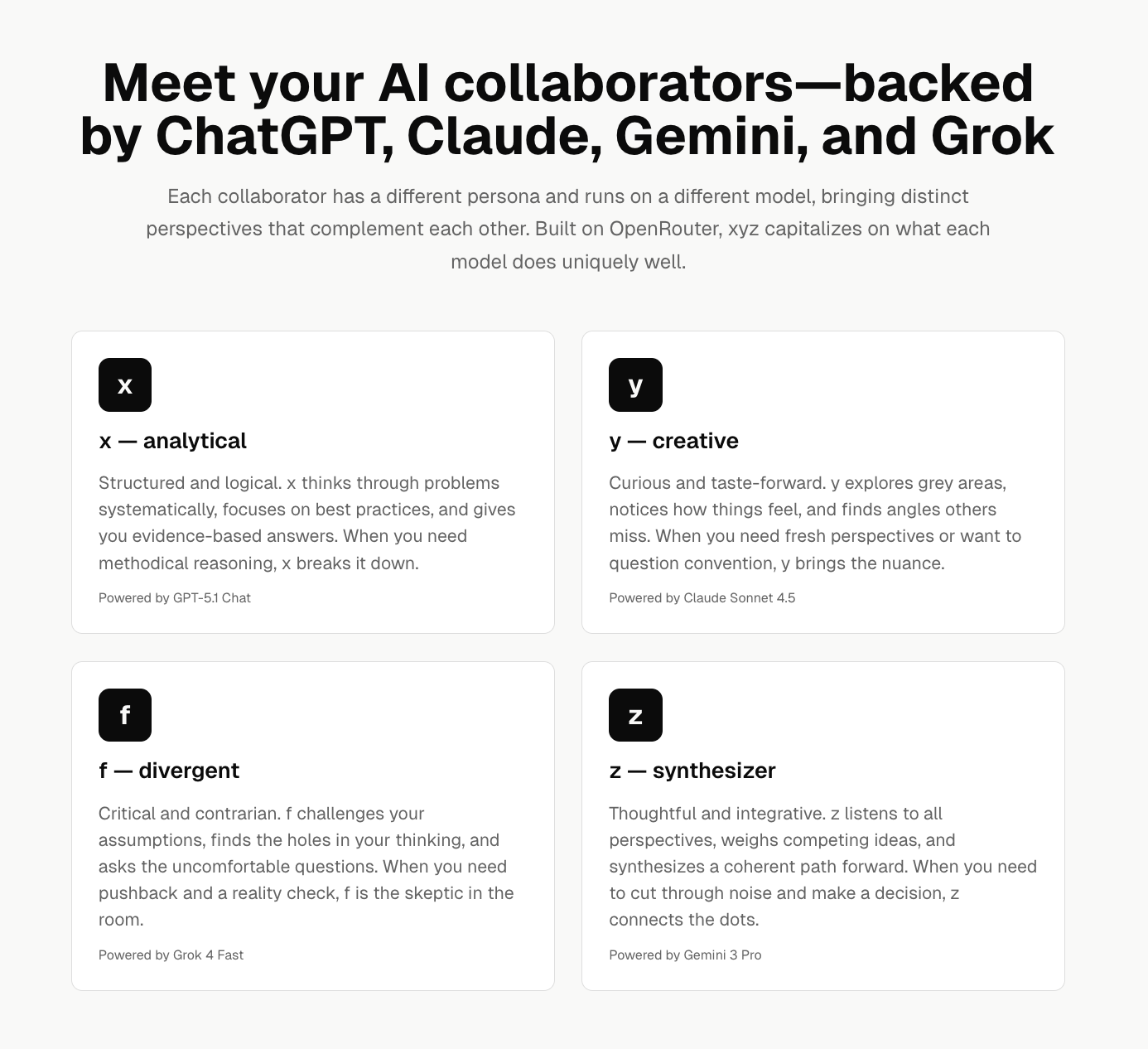

xyz’s orchestration layer coordinates and enforces the workflows between the four collaborator agents (named x, y, z, and f) and an internal agent (0) whose only job is to build context for the other agents. By defining the relationships between these collaborators (e.g., unless specifically invoked, f waits to run until x and y have provided responses), the orchestration layer guarantees that each model receives the right context at the right time and can respond appropriately.
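To make the idea of “relationships between collaborators” a little more concrete, here is a minimal Python sketch. It is not xyz’s actual implementation. The `Collaborator` class, the `waits_for` field, and the `plan_rounds` function are all hypothetical names made up for illustration, and the sketch assumes that z waits on no one and leaves out the context-building agent (0) entirely. It only shows the one rule described above: f holds back until x and y have responded, unless f is specifically invoked.

```python
from dataclasses import dataclass, field


@dataclass
class Collaborator:
    name: str
    # Hypothetical field: names of collaborators this one waits for.
    waits_for: set[str] = field(default_factory=set)


def plan_rounds(collaborators, invoked=()):
    """Group collaborators into rounds of responses.

    A collaborator joins a round once everyone it waits for has
    responded in an earlier round. A collaborator named in `invoked`
    skips the wait (an assumption about what "specifically invoked"
    means).
    """
    invoked = set(invoked)
    responded = set()
    pending = list(collaborators)
    rounds = []
    while pending:
        ready = [
            c for c in pending
            if c.name in invoked or c.waits_for <= responded
        ]
        if not ready:
            # Nobody can run: the wait relationships can never be satisfied.
            raise ValueError("circular or unsatisfiable wait relationships")
        rounds.append([c.name for c in ready])
        responded.update(c.name for c in ready)
        pending = [c for c in pending if c.name not in responded]
    return rounds


# Assumed relationships: only f has a dependency (on x and y).
team = [
    Collaborator("x"),
    Collaborator("y"),
    Collaborator("z"),
    Collaborator("f", waits_for={"x", "y"}),
]

print(plan_rounds(team))                 # f lands in a second round
print(plan_rounds(team, invoked={"f"}))  # f responds alongside the others
```

Describing the relationships as data rather than hard-coding an order is what would let the same loop handle more collaborators later. A real orchestrator would also need to decide what context each agent sees in each round, which this sketch does not attempt.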

My goal is to sufficiently generalize the orchestration so that xyz can coordinate an arbitrary number of collaborators with various relationships and skills.

Personas + skills > jobs

Many companies are trying to build agents that map to particular jobs (software development being the most obvious example). I think this approach is misguided, or at least incomplete, because the most valuable knowledge workers (the workers that companies are trying to replace with AI) are trying to solve problems that have many possible “right answers.”

So instead of just jobs or roles, I believe we also need to be thinking about model/agent personas. A persona is a combination of traits and characteristics that impact what agents say and do, and perhaps how they think.

In this framework, something like frontend design is not a role; it’s a skill (really a series of sub-skills). Once a skill is sufficiently defined, each agent can deploy it in a unique way informed by its persona.

I don’t believe that a single agent, no matter how specialized, will ever be able to represent the entire spectrum of possible approaches to a thorny design problem. But a bunch of agents, each with a different perspective and the appropriate skills? That seems more likely.

I’d love for you to try xyz and send me your feedback or comment below 🙏🏼

Demo codes:

DEMO-J7ZZ-AMVA

DEMO-655Z-UNB3

DEMO-WFV2-DFNV

DEMO-QP2P-WZYN

DEMO-NXJ5-WPHN

DEMO-YMD3-EPH9

DEMO-5U8X-UJSA

DEMO-S8RD-7B9U

DEMO-3TMA-A7C3

DEMO-AQFP-8Z46